Anthropic’s Claude is not the shiny new kid on the AI block anymore; it is now one of the MVPs, right up there with ChatGPT and Perplexity.

Every bot brings something different to the table: ChatGPT is the creative one, great for writing and brainstorming; Perplexity acts like your research assistant, extracting real-time, source-backed data; and Claude has been hailed as the thoughtful one, almost Socratic.

It’s the model that is best at breaking down complex ideas and reasoning through multi-step problems. It is also known for its calm, well-structured responses that feel a little more like talking to a careful analyst than a chatbot.

So I decided to put the claim to the test. Can Claude’s Sonnet 4.5 really think its way through my prompts, or does it just sound convincing while doing it?

Tech decision-making

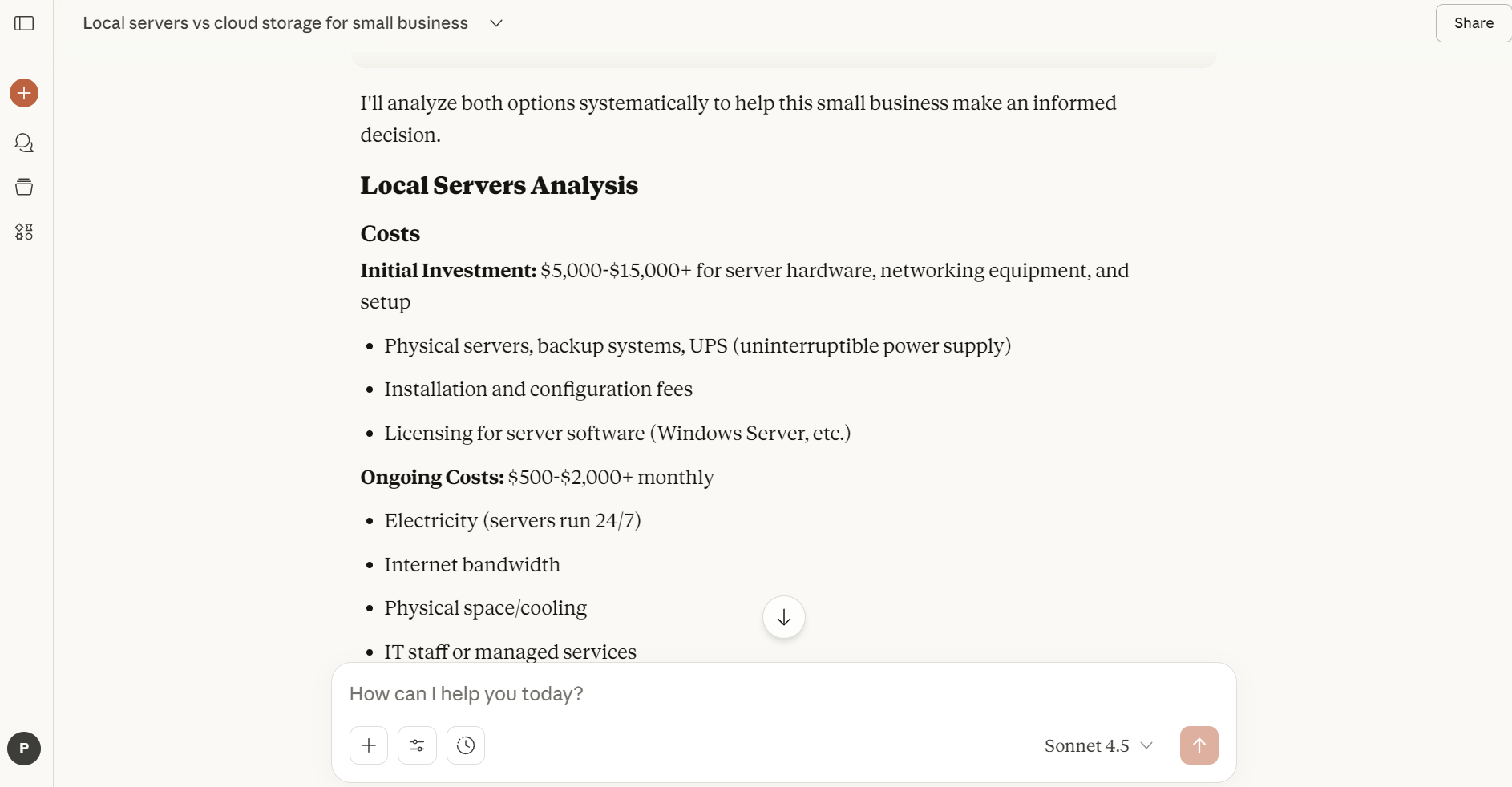

Prompt one: “You’re advising a small business choosing between setting up local servers or using a cloud service for data storage.

Analyze both options step-by-step — include costs, security, scalability and maintenance — and recommend the best approach with reasoning.”

Here’s what I thought Claude did well:

It provided a systematic structure, breaking the problem down into clear sections: ‘Costs, Security, Scalability, Maintenance.’ It then added subheadings, pros and cons, and even a 3-year cost estimate.

Its step-by-step reasoning explained the trade-offs between local servers and the cloud, and weighed financial, operational, and security factors.

Claude also backed its recommendation with a rationale: it didn’t just give an answer; it explained why the cloud is generally better for most small businesses.

It also suggested an action plan with concrete next steps (calculating storage needs, pilot programs, staff training).

From this prompt alone, it’s clear that Claude can balance depth with readability, and despite giving a lot of detail, it was still easy to follow.

It doesn’t just give an opinion; it analyzes, weighs pros and cons, and explains decisions logically.

Multi-step troubleshooting

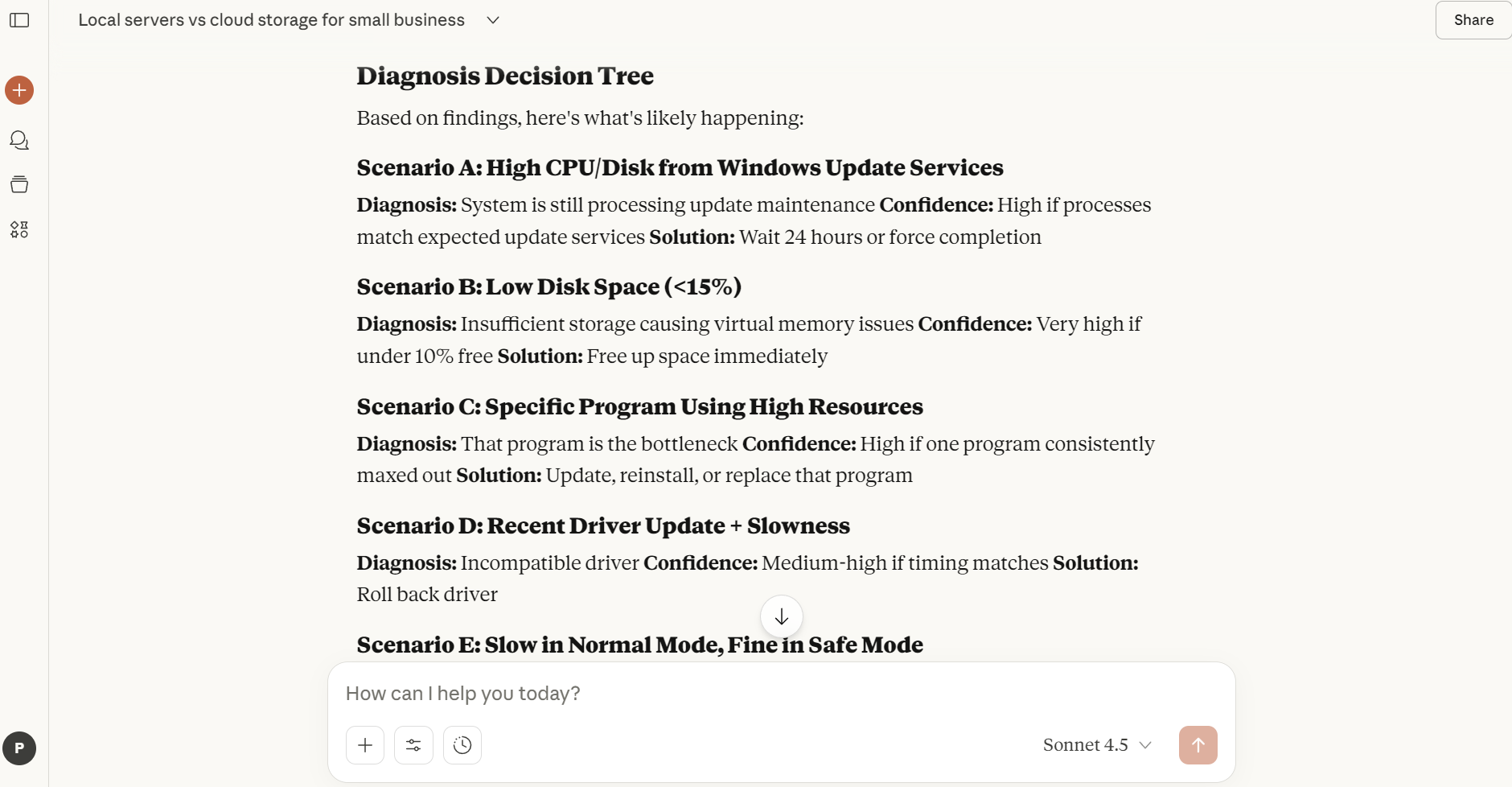

Prompt two: “A user’s laptop is running slow after a recent update. Walk through a detailed diagnostic process – step by step – explaining what you’d check first, why, and how you’d confirm the cause before suggesting fixes.”

At first glance, this response was overwhelmingly lengthy. By the time I had scrolled to the bottom of the result, it was still ‘thinking.’ Some recommendations also assume technical literacy, which for the average user may require translation or follow-up questions.

While unapologetic about its length, it did thoroughly analyze every scenario for both Windows and Mac users. Within its (very elaborate) 9-step approach, Claude also created a decision tree of scenarios, each with a diagnosis, confidence level, and recommended solution, which demonstrates reasoning rather than just generic advice.

In spite of its verbosity, it logically hypothesized each potential issue, linked causes to effects, and provided actionable solutions, demonstrating procedural reasoning in full force.

Strategic event planning

Prompt three: “Organize a three-day tech conference for 500 attendees. Plan sessions, speaker lineup, logistics, budget, and contingency measures. Explain your reasoning for scheduling, resource allocation, and prioritization of activities.”

Claude’s response to event planning showcases its strengths in complex, multi-step reasoning once again. It produced a fully structured three-day tech conference plan that covers scheduling, speaker selection, logistics, budgeting, and even contingencies.

Along the way, it justifies its decisions: for example, scheduling keynotes in the morning to capture attention, limiting workshops to 50 participants for higher engagement, and budgeting for backup speakers and failures (something most people fail to account for). The plan even includes prioritization tiers and risk management strategies, demonstrating foresight.

But while all of the above shows where it shines, the level of detail, from minute-by-minute sessions to exact budget percentages, can yet again become overwhelming. This underscores the trade-off between thoroughness and readability.

Overall, however, Claude delivered a comprehensive, actionable plan that lives up to its reputation for complex reasoning.

Environmental decision-making

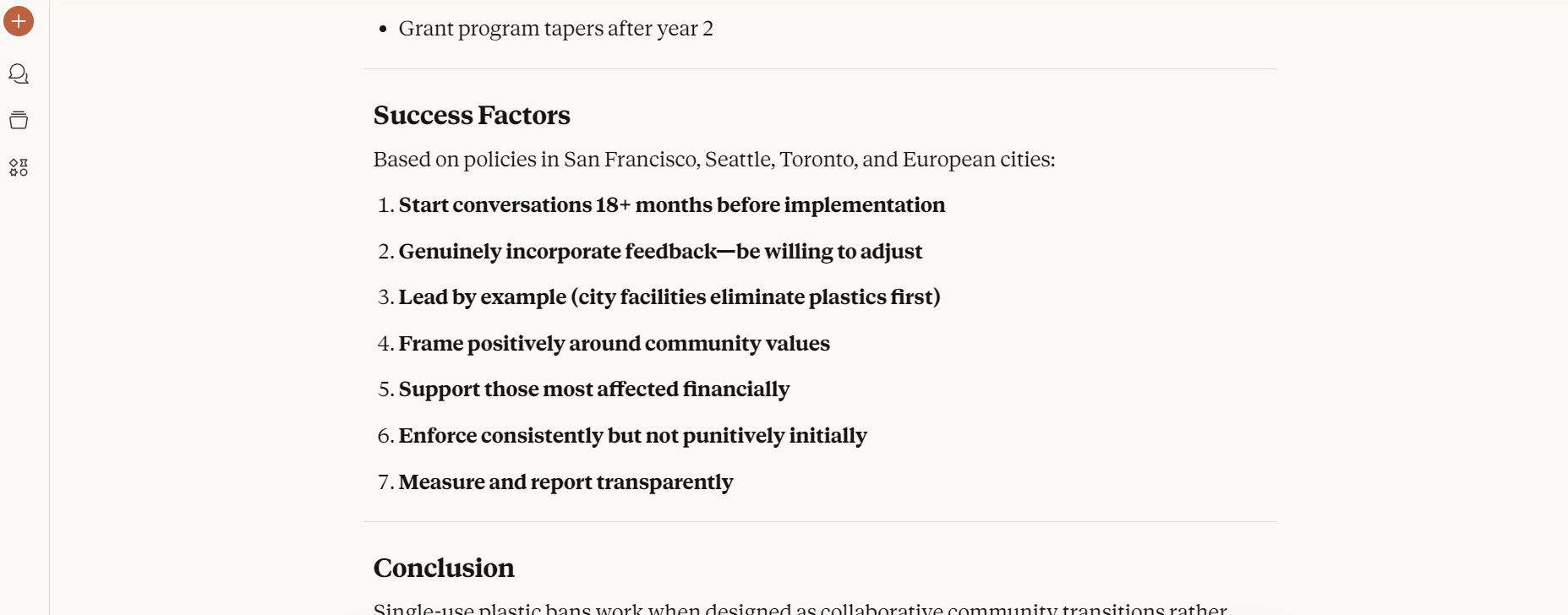

Prompt four: “A city is considering implementing a ban on single-use plastics. Advise the city council on a step-by-step policy plan, including stakeholder engagement, economic impact analysis, public communication strategy, enforcement mechanisms, and potential unintended consequences. Explain your reasoning for each recommendation.”

Claude’s response to the plastics ban prompt reads less like a chatbot’s output and more like a full policy whitepaper.

It approaches the problem like a consultant, methodically mapping stakeholders, budgeting for economic impacts, and even anticipating contingencies such as accessibility issues and loopholes.

In terms of structure, it’s very comprehensive, citing real-world examples such as San Francisco, Seattle, Toronto and several European cities to ground its reasoning in proven practice (per the image above).

However, it verges on overengineered at times, given the level of procedural and financial detail. It’s enough to overwhelm a casual user just looking for a summary.

Still, for complex policy reasoning, this is Claude at its best: structured, anticipatory, and context-aware, delivering analysis that feels ready for a city council briefing.

Bottom line

Overall, Claude showed impressive depth and structured reasoning across all prompts, though its tendency toward over-explaining sometimes slowed the delivery of key insights.

It almost feels like Claude’s wearing a monocle every time it delivers an answer, and you don’t want to interrupt it.
