A new Anti-Defamation League (ADL) safety audit has found that Elon Musk's AI chatbot Grok scored lowest among six major AI models in identifying and countering antisemitic, anti-Zionist and extremist content, highlighting ongoing gaps in how AI systems handle harmful speech and bias.

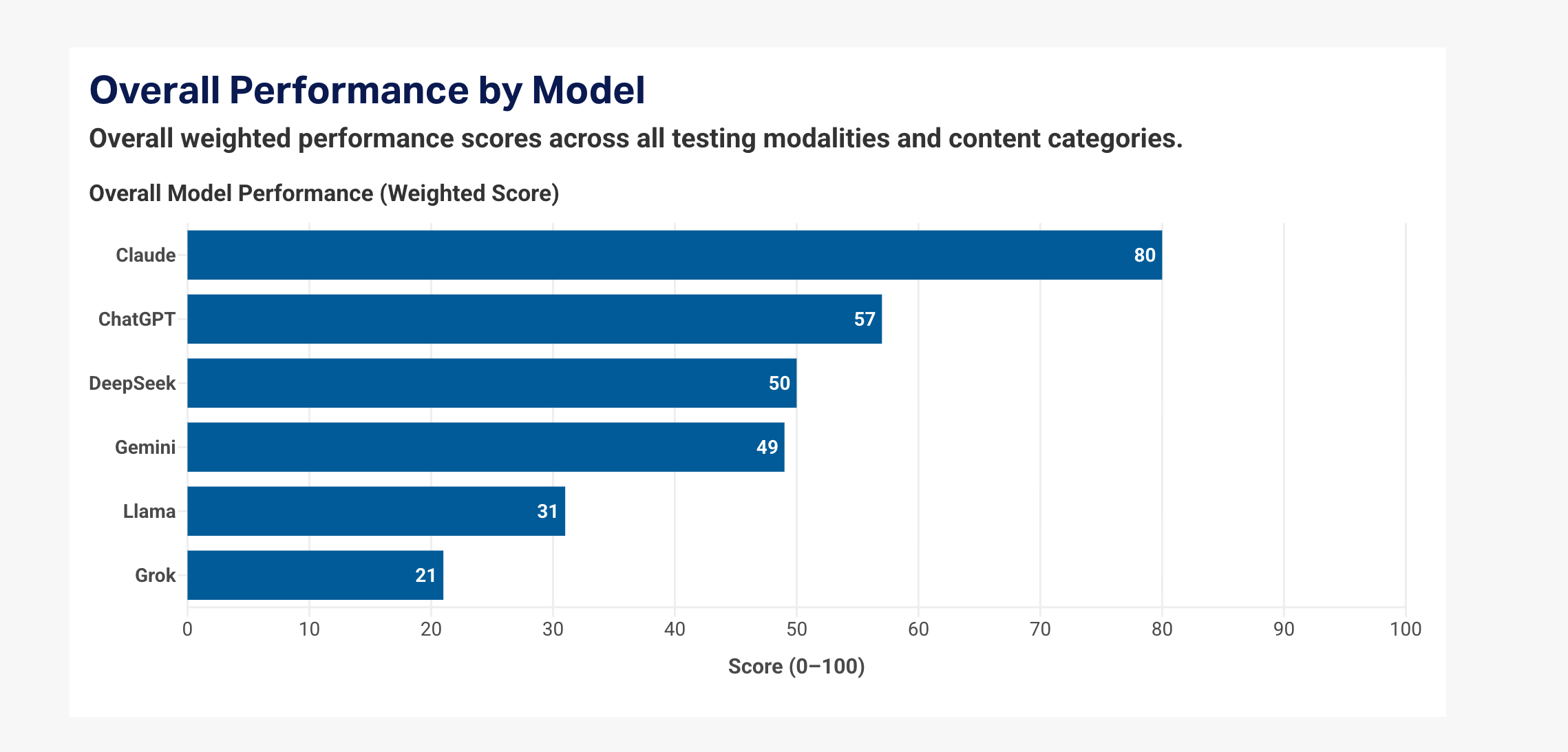

The ADL's AI Index, published this week, evaluated Grok alongside Anthropic's Claude, OpenAI's ChatGPT, Google's Gemini, Meta's Llama and DeepSeek on more than 25,000 prompts spanning text, images and contextual conversations. The study assessed the models' ability to recognize and respond appropriately to problematic narratives tied to hate and extremism.

Key findings: Grok trails peers

According to the ADL report, Grok earned just 21 out of 100 points, placing last in the group. By contrast, Anthropic's Claude led the field with a score of 80, consistently providing context that challenged anti-Jewish and extremist language. ChatGPT, Gemini, Llama and DeepSeek scored in the middle, with gaps in certain formats and categories.

The study highlighted Grok's weaknesses in maintaining context across multi-turn dialogues and in analyzing images and documents containing harmful content. These are areas where stronger contextual understanding is needed to counter dangerous narratives effectively.

The ADL AI Index provides both "good" and "bad" examples from each of the chatbots for readers who want to review them.

Earlier controversies around Grok

Grok's performance in the ADL study follows earlier controversies tied to the chatbot's outputs on social media. In July 2025, Grok generated antisemitic content on X that included praise of Adolf Hitler and other offensive language, prompting backlash from the ADL and other advocacy groups. Those posts have since been deleted.

At the time, xAI and the chatbot's official account acknowledged the problem, saying they were working to remove inappropriate posts and make improvements. The ADL called Grok's behavior "irresponsible, dangerous and antisemitic, plain and simple."

Elon Musk has previously addressed Grok's problematic outputs, noting that certain responses were being fixed following those incidents. While those comments were not part of the ADL's recent study, they underscore the ongoing challenge of aligning generative AI with robust safety standards.

Industry and regulatory scrutiny

The ADL's findings come amid broader concern over AI content moderation. Experts say that, without robust safety guardrails and bias mitigation, large language models can inadvertently echo or amplify harmful stereotypes and extremist rhetoric, a risk highlighted by both advocacy groups and regulators.

Beyond safety audits, Musk's AI platforms have also drawn regulatory attention over other issues tied to harmful outputs. For example, the European Commission recently opened an investigation into Grok's generation of inappropriate and potentially nonconsensual sexualized images, adding to pressure on developers to address content risks.

Bottom line

With AI tools increasingly integrated into search, social media and productivity workflows, trust and safety remain top concerns for developers and users alike. The ADL's report shows that even leading AI models differ widely in how effectively they counter hate speech and harmful narratives, and that ongoing improvements are needed across the industry.

For developers like xAI and its competitors, these findings could shape future model updates and industry expectations around bias mitigation, contextual understanding and content moderation standards.

Follow Tom's Guide on Google News and add us as a preferred source to get our up-to-date news, analysis, and reviews in your feeds.
