Anthropic, the company behind Claude AI, has had a meteoric rise. Since its founding, it has gone from a little-known AI research firm to a global power, competing with the likes of OpenAI and Google.

However, in a world where AI is everywhere, in your smartphone, in your fridge and in your kid's toys, Anthropic is surprisingly low-key in its development style. It doesn't make AI image or video generators, and Claude, its own chatbot, is about as minimal as they come, lacking many of the bells and whistles seen in its rivals.

On top of that, Anthropic seems to be far more concerned about the future of AI than its competitors. Dario Amodei, the company's CEO, has spoken openly about AI and its dangers, and the company as a whole has spent years highlighting those risks.

Safety first

AI is inherently messy. Over the years, we’ve seen chatbots glitch out, adopt bizarre personalities and fall apart in front of our eyes. There has been a long list of troubling hallucinations and concerns over how well this technology can take on the roles it is being given. Anthropic seemingly wants to avoid this as much as possible.

“We’re trying to build safe, interpretable, steerable AI systems. These things are getting more and more powerful, and because they’re more powerful, they almost necessarily get more dangerous unless you put guardrails around them to make them safe intentionally,” Scott explained.

“We want to improve the frontier of intelligence, but do so in a way that is safe and interpretable. We almost necessarily need to innovate on safety in order to achieve our mission to deploy a transformative AI.”

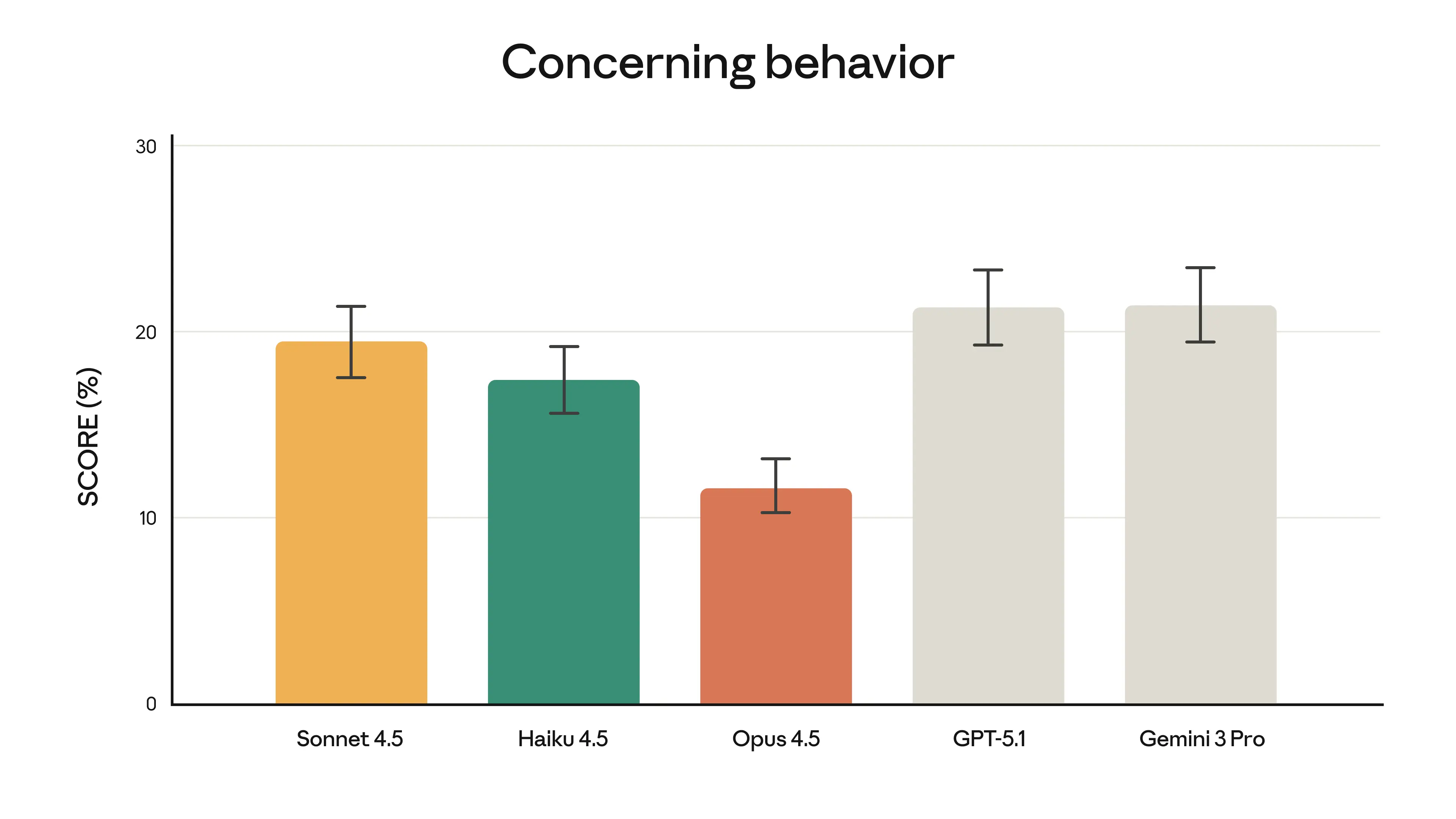

When Anthropic released Claude Opus 4.5 at the end of last year, it claimed it was the most robustly aligned model it had released, better able to resist hackers, cybercriminals and malicious users than its rivals.

In fact, across factors including current harms, existential safety, risk assessment and more, Anthropic was rated the top AI company for safety in the Safety Index, narrowly outperforming OpenAI.

In recent times, concerns about AI haven't just been about how steerable it is. Chatbots like Grok and ChatGPT have been accused of being overly agreeable or too willing to push users in certain directions.

When asked if this is something the team considers when designing safe AI, White didn't comment in depth, but agreed it was something the team thought about during the design process.

White then went on to explain that prioritizing safety shouldn't be seen as something holding the company back, but instead as a positive.

"Safety is just part of making sure that we build powerful things. We don't need to think about safety as a trade-off, it's just a necessary requirement, and you can do it and show everybody that it's possible. That's what's most important to me, to show that safety isn't a limitation."

Browsers and agents

In recent months, we've seen AI explode out of its usual confines. Where it was once limited to chatbots and small tools, some of the bigger companies have now created their own browsers, extensions and plugins.

OpenAI launched its Atlas browser, Google has integrated Gemini into the likes of Gmail and Google Calendar, and Anthropic has created its own browser extension for Claude.

With the launch of these tools came new security risks. OpenAI's Atlas came under fire, along with Perplexity's Comet, for the risk of prompt injections. This is where someone hides malicious instructions for an AI inside a web page or text that it's interacting with. When the AI reads this content, it may automatically follow those hidden instructions.

When AI was confined to chatbots, where it couldn't interact with the outside world, it was a lot safer. The risk comes when it begins to interact more with the internet, taking over certain controls or being given access to web pages.

"In the last couple of months, when we were building the Claude browser extension, which allows Claude to control your browser and take actions on its own, we discovered that there were novel prompt injection risks," White explained.

"Instead of launching it to everybody, we launched it to a very small set of experimental users who understood the risks, allowing us to test the technology before it was released more widely."

While Claude's browser extension has proved successful, it's much more limited in what it can do compared with the likes of Copilot or the browsers from Perplexity and OpenAI. When asked whether Anthropic would ever develop a full browser for Claude, White was hesitant.

"Claude started out as a really simple chatbot, and now it's more like a really capable collaborator that can interact with all of these different tools and knowledge bases. These are significant tasks that would take a person many hours to do themselves," White said.

"But by accessing all of these systems, it does add new risk vectors. But instead of saying we're not going to do something new, we're going to solve for those vectors. We're going to continue to investigate new ways of using Claude and new ways of it taking stuff off your hands, but we're going to do it safely."

The bells and whistles

Anthropic has kept a laser focus on its goals for Claude. Unlike its competitors, it has made no time for image or video generation and shows little apparent interest in any feature that doesn't offer practical use cases.

"We've prioritized areas that can help Claude advance people and push their thinking forward, helping them solve bigger, meatier challenges, and that has just been the most critical path for us," said White.

“We’re really just trying to create safe AGI, and the reality is that the path to safe AGI is solving really hard problems, often in professional contexts, but also in personal situations. So we’re working on faster speeds, better optimization and safer models.”

Talking about how he sees the role of Claude, White explained that he wanted Claude to be a genuinely useful tool that could eliminate the problems in your life. In the future, he hopes it will reach the point where it can handle some of life's biggest challenges, freeing up hours of our day for other things.

It's not just the absence of features like image generators; Anthropic feels like a more serious company overall than its competitors. Its time and effort are spent on research, it is less interested in appealing to the wider consumer market, and the tools it focuses on are dedicated to coding, workflows and productivity.

Follow Tom's Guide on Google News and add us as a preferred source to get our up-to-date news, analysis, and reviews in your feeds.
