When I tap the app for Anthropic’s Claude AI on my phone and give it a prompt, such as “Tell me a story about a mischievous cat,” a lot happens before the result (The Great Tuna Heist) appears on my screen.

My request gets sent to the cloud, meaning a computer in a large data center somewhere, to be run through Claude’s Sonnet 4.5 large language model. The model assembles a plausible response using advanced predictive text, drawing on the enormous amount of data it has been trained on. That response is then routed back to my iPhone, appearing word by word, line by line, on my screen. It has traveled hundreds, if not thousands, of miles and passed through multiple computers on its journey to and from my little phone. And it all happens in seconds.


This system works well if what you’re doing is low-stakes and speed isn’t really an issue. I can wait a few seconds for my little story about Whiskers and his misadventure in a kitchen cabinet. But not every task for artificial intelligence is like that. Some require tremendous speed. If an AI device is going to alert someone to an object blocking their path, it can’t afford to wait a second or two.

Other requests require more privacy. I don’t care if the cat story passes through dozens of computers owned by people and companies I don’t know and may not trust. But what about my health information, or my financial data? I’d want to keep a tighter lid on that.


Speed and privacy are two major reasons why tech developers are increasingly moving AI processing away from massive corporate data centers and onto personal devices such as your phone, laptop or smartwatch. There are cost savings too: There’s no need to pay a big data center operator. Plus, on-device models can work without an internet connection.

But making this shift possible requires better hardware and more efficient, often more specialized, AI models. The convergence of those two factors will ultimately shape how fast and seamless your experience is on devices like your phone.

Mahadev Satyanarayanan, known as Satya, is a professor of computer science at Carnegie Mellon University. He has long researched what’s called edge computing: the concept of handling data processing and storage as close as possible to the actual user. He says the ideal model for true edge computing is the human brain, which doesn’t offload tasks like vision, recognition, speech or intelligence to any kind of “cloud.” It all happens right there, completely “on-device.”

“Here’s the catch: It took nature a billion years to evolve us,” he told me. “We don’t have a billion years to wait. We’re trying to do this in five years or 10 years, at most. How are we going to speed up evolution?”

You speed it up with better, faster, smaller AI running on better, faster, smaller hardware. And as we’re already seeing with the latest apps and devices, including those we saw at CES 2026, it’s well underway.

AI is probably running on your phone right now

On-device AI is far from novel. Remember in 2017 when you could first unlock your iPhone by holding it in front of your face? That face recognition technology used an on-device neural engine. It isn’t gen AI like Claude or ChatGPT, but it’s fundamental artificial intelligence.

Today’s iPhones use a much more powerful and versatile on-device AI model. It has about 3 billion parameters, the learned numerical weights a language model uses to calculate the probability of each next word. That’s relatively small compared with the big general-purpose models most AI chatbots run on. DeepSeek-R1, for example, has 671 billion parameters. But Apple’s model isn’t meant to do everything. Instead, it’s built for specific on-device tasks such as summarizing messages. Just like the facial recognition technology that unlocks your phone, this is something that can’t afford to depend on an internet connection to run off a model in the cloud.
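A rough back-of-envelope calculation shows why parameter count matters so much on a phone. The memory needed just to hold a model’s weights is roughly parameters times bytes per parameter. The sketch below assumes two illustrative storage precisions, 16-bit (2 bytes) and 4-bit quantized (half a byte). These are not figures Apple or DeepSeek have confirmed for these particular models, and the sketch ignores activations and other runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores activations, caches and runtime overhead, so real usage is higher.
    """
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


# Parameter counts come from the article; the bit widths are illustrative assumptions.
models = {"~3B on-device model": 3e9, "DeepSeek-R1": 671e9}

for name, params in models.items():
    for bits in (16, 4):
        print(f"{name} at {bits}-bit: {weight_memory_gb(params, bits):,.1f} GB")
```

Even when compressed to 4 bits, a 671 billion-parameter model needs hundreds of gigabytes just for its weights. That is far more memory than a phone has. A 3 billion-parameter model fits in a few gigabytes.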

Apple has expanded its on-device AI capabilities, branded Apple Intelligence, to include visual recognition features, such as letting you look up things you took a screenshot of.

On-device AI models are everywhere. Google’s Pixel phones run the company’s Gemini Nano model on its custom Tensor G5 chip. That model powers features such as Magic Cue, which surfaces information from your emails, messages and more right when you need it, without you having to search for it manually.

Makers of phones, laptops, tablets and the hardware inside them are designing devices with AI in mind. But it goes beyond those. Think about smartwatches and smart glasses, which offer far more limited space than even the thinnest phone.

“The system challenges are very different,” said Vinesh Sukumar, head of generative AI and machine learning at Qualcomm. “Can I do it all on all devices?”

Right now, the answer is usually no. The workaround is fairly straightforward: When a request exceeds the on-device model’s capabilities, the device offloads the task to a cloud-based model. But depending on how that handoff is managed, it can undermine one of the key benefits of on-device AI: keeping your data entirely in your hands.
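Here is a minimal sketch of that handoff decision. It assumes a device that runs a request locally only when the prompt fits the local model’s context window and the task is one the local model supports, and otherwise sends it to the cloud. The token limit, task names and `route` function are hypothetical placeholders, not any vendor’s actual logic.

```python
from dataclasses import dataclass

# Hypothetical limits for an on-device model; real devices and models differ.
LOCAL_MAX_TOKENS = 4096
LOCAL_TASKS = {"summarize", "classify", "extract"}


@dataclass
class Request:
    task: str
    prompt_tokens: int


def route(request: Request) -> str:
    """Return "device" if the local model can handle the request, else "cloud"."""
    fits_context = request.prompt_tokens <= LOCAL_MAX_TOKENS
    supported = request.task in LOCAL_TASKS
    return "device" if fits_context and supported else "cloud"


print(route(Request("summarize", 800)))    # device
print(route(Request("summarize", 20000)))  # cloud: too long for the local model
print(route(Request("write_story", 50)))   # cloud: task not supported locally
```

Real systems weigh more signals than these two, such as battery, connectivity and model confidence. The basic shape is the same, though: try locally first, and offload only what the device can’t handle.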

More private and secure AI

Experts repeatedly point to privacy and security as key advantages of on-device AI. In a cloud scenario, data is flying every which way and faces more moments of vulnerability. If it stays on an encrypted phone or laptop drive, it’s much easier to secure.

The data used by your devices’ AI models could include things like your preferences, browsing history or location information. While all of that is essential for AI to personalize your experience, it’s also the kind of information you may not want falling into the wrong hands.

“What we’re pushing for is to make sure the user has access and is the sole owner of that data,” Sukumar said.

Apple Intelligence gave Siri a brand new look on the iPhone.

There are a few different ways offloading can be handled to protect your privacy. One key factor is that you’d have to give permission for it to happen. Sukumar said Qualcomm’s goal is to make sure people are informed and have the ability to say no when a model reaches the point of offloading to the cloud.

Another approach, and one that can work alongside requiring user permission, is to ensure that any data sent to the cloud is handled securely, briefly and temporarily. Apple, for example, uses technology it calls Private Cloud Compute. Offloaded data is processed only on Apple’s own servers, only the minimum data needed for the task is sent, and none of it is stored or made accessible to Apple.
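The two safeguards described above, asking permission and sending only the minimum data, can be combined in a few lines. The sketch below is a hypothetical illustration of the idea. It does not reflect how Apple’s Private Cloud Compute or Qualcomm’s software actually work. The `prepare_offload` function, the field names and the permission callback are all invented for this example.

```python
from typing import Callable, Optional


def prepare_offload(
    user_data: dict,
    needed_fields: set[str],
    ask_permission: Callable[[set[str]], bool],
) -> Optional[dict]:
    """Return the minimal payload to send to the cloud, or None if the user declines.

    Only the fields the task needs are included; everything else stays on the device.
    The user is shown exactly which fields would leave the device before deciding.
    """
    payload = {k: v for k, v in user_data.items() if k in needed_fields}
    if not ask_permission(set(payload)):
        return None  # user said no: nothing leaves the device
    return payload


# Hypothetical on-device data; only "query" and "location" are needed for this task.
profile = {"query": "nearby pharmacies", "location": "Pittsburgh", "browsing_history": ["a", "b"]}
print(prepare_offload(profile, {"query", "location"}, lambda fields: True))
# {'query': 'nearby pharmacies', 'location': 'Pittsburgh'}
print(prepare_offload(profile, {"query", "location"}, lambda fields: False))
# None
```

In this sketch, data minimization happens before the permission prompt. The user is asked about the reduced payload rather than about everything the device knows.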

AI without the AI cost

AI models that run on devices offer an advantage for both app developers and users: The ongoing cost of running them is basically nothing. There’s no cloud services company to pay for the energy and computing power. It’s all on your phone. Your pocket is the data center.

That’s what drew Charlie Chapman, developer of a noise machine app called Dark Noise, to use Apple’s Foundation Models framework for a tool that lets you create a mix of sounds. The on-device AI model isn’t generating new audio. It just selects different existing sounds and volume levels to make one mix.
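The key design constraint is that the model only chooses from sounds that already exist and sets their volumes. Apple’s Foundation Models framework is a Swift API, and Dark Noise’s code isn’t public. The Python sketch below therefore just illustrates that constraint in a language-neutral way. The catalog, the sound names and the `build_mix` function are hypothetical.

```python
# Hypothetical sound catalog; Dark Noise's real library and code are not shown here.
CATALOG = {"rain", "thunder", "fan", "ocean", "fireplace", "brown_noise"}


def build_mix(suggestion: dict[str, float]) -> dict[str, float]:
    """Turn a model's suggested {sound: volume} mapping into a playable mix.

    Sounds not in the catalog are dropped (no new audio is generated),
    and volumes are clamped to the 0.0 to 1.0 range.
    """
    return {
        sound: min(max(volume, 0.0), 1.0)
        for sound, volume in suggestion.items()
        if sound in CATALOG
    }


# A suggestion for "a cozy stormy night", as a model might return it.
print(build_mix({"rain": 0.7, "thunder": 1.4, "wolf_howl": 0.5, "fireplace": 0.3}))
# {'rain': 0.7, 'thunder': 1.0, 'fireplace': 0.3}
```

Limiting a small model to picking from a fixed menu is one way to make its output dependable. Anything it invents outside the catalog is simply discarded.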

Because the AI runs on-device, there’s no ongoing cost as you make your mixes. For a small developer like Chapman, that means less risk tied to the size of his app’s user base. “If some influencer randomly posted about it and I got an incredible amount of free users, it doesn’t mean I’m going to suddenly go bankrupt,” Chapman said.
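Chapman’s point about a viral spike comes down to simple arithmetic. With cloud inference, the developer’s bill grows in proportion to the number of users. With on-device inference, the developer’s marginal cost stays at zero. The per-request price below is a made-up placeholder, not a real quote from any provider, and the 30 requests per user per month is likewise an assumption.

```python
def monthly_inference_cost(users: int, requests_per_user: int, price_per_request: float) -> float:
    """Developer's monthly inference bill: grows linearly with the user base."""
    return users * requests_per_user * price_per_request


HYPOTHETICAL_CLOUD_PRICE = 0.002  # dollars per request; placeholder, not a real rate
ON_DEVICE_PRICE = 0.0             # the user's phone does the work

for users in (1_000, 100_000, 1_000_000):
    cloud = monthly_inference_cost(users, 30, HYPOTHETICAL_CLOUD_PRICE)
    local = monthly_inference_cost(users, 30, ON_DEVICE_PRICE)
    print(f"{users:>9,} users: cloud ${cloud:,.0f}/month, on-device ${local:,.0f}/month")
```

Whatever the real price, the shape holds. A 1,000-fold jump in free users means a 1,000-fold jump in the cloud bill, while the on-device line stays at zero.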


Because on-device AI has no ongoing costs, small, repetitive tasks like data entry can be automated without big bills or computing contracts, Chapman said. The downside is that on-device models vary from device to device, so developers have to do more work to make sure their apps run on different hardware.

The more AI tasks are handled on consumer devices, the less AI companies have to spend on the massive data center buildout that has every major tech company scrambling for cash and computer chips. “The infrastructure cost is so massive,” Sukumar said. “If you really want to drive scale, you don’t want to push that burden of cost.”

For creators using AI for video or image editing, running those models on your own hardware has the benefit of avoiding expensive cloud-based subscription or usage fees. At CES, we saw how dedicated computers or specialized devices, like the Nvidia DGX Spark, can power intensive video generation models like Lightricks-2.

The future is all about speed

Especially when it comes to features on devices like glasses, watches and phones, much of the real usefulness of AI and machine learning isn’t like the chatbot I used to make a cat story at the beginning of this article. It’s things like object recognition, navigation and translation. Those require more specialized models and hardware, but they also require more speed.

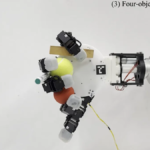

Satya, the Carnegie Mellon professor, has been researching different uses of AI models and whether on-device models can handle them accurately and quickly enough. When it comes to image classification, today’s technology is doing pretty well: It can deliver accurate results within 100 milliseconds. “Five years ago, we were nowhere near able to get that kind of accuracy and speed,” he said.

This cropped screenshot of video footage captured with the Oakley Meta Vanguard AI glasses shows workout metrics pulled from the paired Garmin watch.

But for four other tasks, devices still need to offload to a more powerful computer elsewhere: object detection, instance segmentation (the ability to recognize objects and their shape), activity recognition and object tracking.
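To make the 100-millisecond figure concrete, here is a hypothetical benchmarking sketch. It times a local inference function over several inputs and keeps the task on-device only if the median latency fits the budget. The budget value comes from Satya’s classification example. The two stand-in functions are invented placeholders for real models: one does trivial work, the other sleeps for 150 milliseconds to simulate a heavier task.

```python
import statistics
import time
from typing import Any, Callable, Sequence

LATENCY_BUDGET_MS = 100.0  # the classification figure Satya cites


def measure_latency(infer: Callable[[Any], Any], inputs: Sequence[Any]) -> float:
    """Run `infer` on each input and return the median wall-clock latency in ms."""
    timings = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


def placement(infer: Callable[[Any], Any], inputs: Sequence[Any],
              budget_ms: float = LATENCY_BUDGET_MS) -> str:
    """Keep the task on-device if its median latency fits the budget, else offload."""
    return "on-device" if measure_latency(infer, inputs) <= budget_ms else "offload"


# Hypothetical stand-ins for a fast classifier and a slower detector.
def fast_classifier(frame):
    return sum(frame) % 10


def slow_detector(frame):
    time.sleep(0.15)  # simulate 150 ms of work
    return []


frames = [[1, 2, 3]] * 5
print(placement(fast_classifier, frames))  # on-device
print(placement(slow_detector, frames))    # offload
```

The median keeps one-off hiccups from skewing the result. A real evaluation would also check accuracy and worst-case latency, since a navigation warning that arrives late even occasionally is a problem.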

“I think in the next number of years, five years or so, it’s going to be very exciting as hardware vendors keep trying to make mobile devices better tuned for AI,” Satya said. “At the same time we also have AI algorithms themselves getting more powerful, more accurate and more compute-intensive.”

The opportunities are immense. Satya said future devices may be able to use computer vision to warn you before you trip on uneven pavement, or to remind you who you’re talking to and provide context about your past communications with them. Those kinds of things will require more specialized AI and more specialized hardware.

“These are going to emerge,” Satya said. “We can see them on the horizon, but they’re not here yet.”