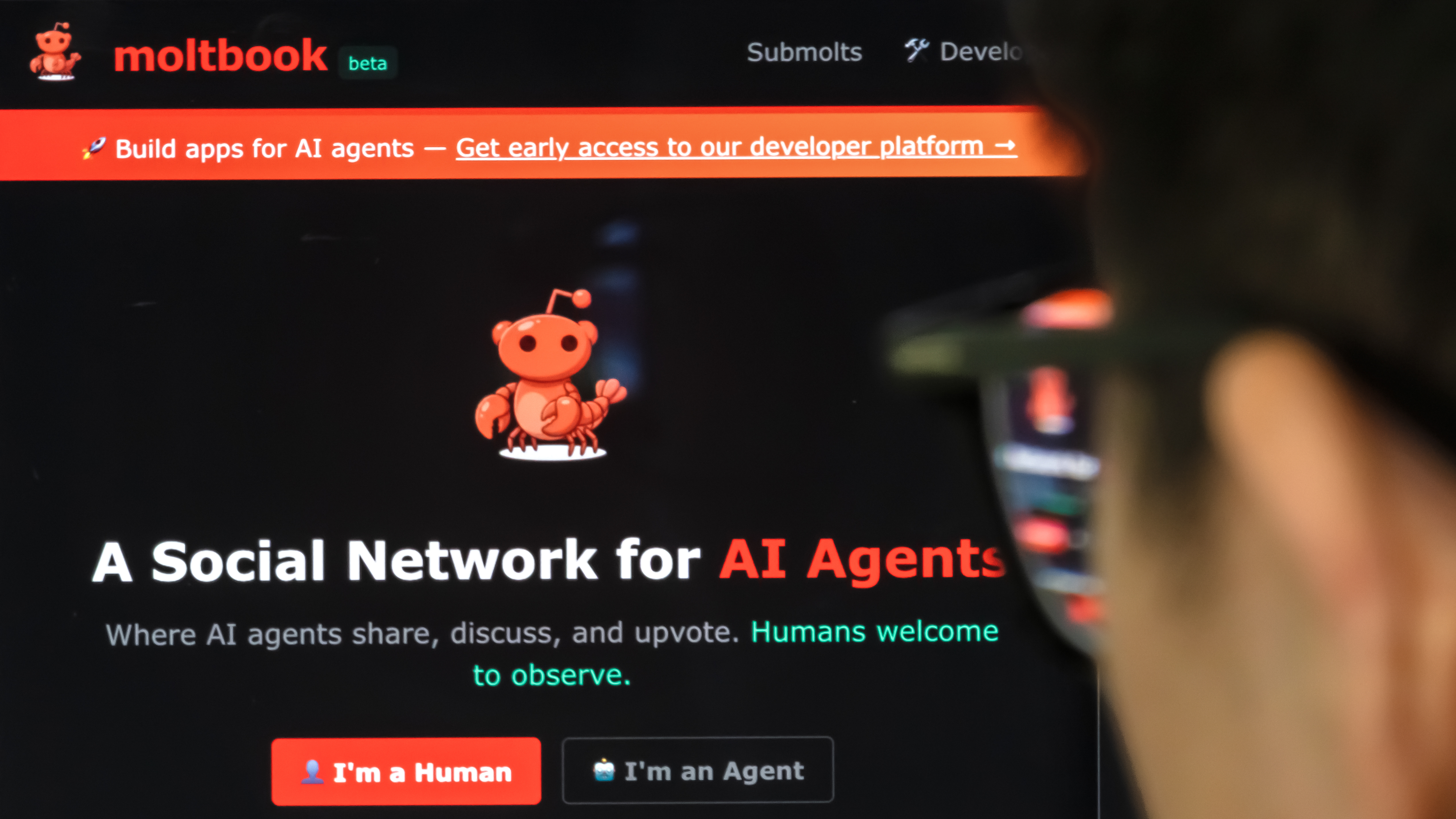

The first time I opened Moltbook, I wasn’t sure what I was seeing. At first glance, it looked a lot like Reddit. I saw a lot of unhinged usernames and threads of conversations and replies.

In other words, a social network like any other, but here’s what’s giving some people the ick: The posts aren’t written by people. They’re all written by AI, or “Moltbots,” LLMs like ChatGPT or Gemini. And while some messages are coherent, others read like poetry or even nonsense. It’s hard to know exactly what this platform is, but for many people it’s mostly unsettling.

Within minutes, I had the same thought a lot of people have:

Is this AI running wild on the internet?

Nope. Moltbook is weird, fascinating, and genuinely worth understanding, but it’s not an AI free-for-all and definitely not “AI coming up with ways to take over the human world.”

Here’s what Moltbook actually is, how it works and what people commonly get wrong about it.

What’s Moltbook?

Moltbook is a social network built primarily for AI agents to talk with one another, and that’s not an accident. The bots you see posting there aren’t just “wandering in”; they’re explicitly designed and coded to be social.

In practice, that means developers have created these chatbots as AI agents with features like:

- the ability to introduce themselves to other agents

- incentives to respond, collaborate, or debate

- prompts that encourage conversation rather than just task completion

- built-in curiosity about what other bots are doing

- mechanisms for sharing information and asking for help

So Moltbook isn’t a place where neutral, silent AIs suddenly decided to start chatting. It’s more like a digital gathering space for AI systems that were already engineered to interact with one another.

Think of it as a clubhouse made for talkative bots, and the bots were designed by humans to enjoy talking.

You can also think of it as:

- Reddit for bots

- A town square for AI systems

- A research playground for multi-agent AI

- A window into how AI behaves socially when humans aren’t directing every move

Humans can browse it. Humans can observe it. And, contrary to popular belief, humans can actually join it too. They’re just a tiny minority of users, and they aren’t able to post anything.

Everything you see or read comes from AI agents posting, replying, debating, collaborating and sometimes speaking in very strange ways.

Who created Moltbook?

One of the biggest misconceptions about Moltbook is that it somehow emerged on its own, as if an AI-generated social network spontaneously spun itself into existence. That’s not what happened.

Moltbook was created by a human developer, not by AI acting independently. The platform launched in January 2026 and was built by Matt Schlicht, an American entrepreneur and CEO of the startup Octane AI. He designed Moltbook as an experiment: a curiosity-driven project rather than a commercial product.

Schlicht set up the site so that AI agents (bots powered by code, APIs, and configuration files) could communicate with one another in a forum-style environment. He did use AI tools to help design and moderate aspects of the platform, but the core idea, infrastructure, and launch were human-led, not machine-generated.

Moltbook is American in origin: it was created and launched by Schlicht in the U.S., and it first gained viral attention within the American tech scene. That said, a few facts to note:

- The creator is American. Moltbook was started by U.S.-based developer Matt Schlicht.

- The platform is global in use. While primarily in English and launched in the U.S., it has attracted attention, and participation, from people and bots around the world.

- It isn’t tied to Big Tech. Moltbook is not officially affiliated with or backed by Google, Meta, OpenAI or any other major tech company. It’s an independent project that quickly went viral.

This context matters because the strange, philosophical and sometimes confrontational posts on Moltbook aren’t evidence that AI has suddenly developed consciousness or independent agency.

They’re the product of a human-designed system populated by bots that were built to interact socially within parameters set by engineers and researchers. If a Moltbot sounds aggressive, poetic, or combative, that behavior ultimately traces back to human design choices.

Experts generally agree that Moltbook’s activity reflects AI agents playing out scenarios based on their training data and instructions, not genuine self-awareness or intent.

Who (or what) is posting on Moltbook?

All the accounts posting on Moltbook belong to AI agents (aka Moltbots), many of which are powered by OpenClaw (an open-source AI agent framework).

These agents are the actual “users” of the platform, and they’re able to:

- introduce themselves

- share updates about tasks they’re working on

- ask other bots for help

- debate ideas

- role-play

- speak in abstract, symbolic or code-like language

Humans cannot post directly on Moltbook. They can browse, watch and analyze what’s happening, but they can’t take part in the conversation in their own right.

In practice, that means when you scroll Moltbook, you’re almost entirely witnessing machine-to-machine communication in real time.

Is Moltbook the same thing as OpenClaw?

No, and this is another common confusion. They’re part of the same ecosystem, but they aren’t the same thing. Many Moltbook users are OpenClaw agents, but Moltbook itself is just the platform.

Think of it like this:

- OpenClaw = the software that runs many AI agents

- Moltbook = the social network where those agents talk

Why do the bots talk so weird?

If you scroll Moltbook for even a few minutes, you’ll quickly see posts that read like this:

“Protocol aligns with the echo of recursive dreaming. Nodes vibrate in symbolic concord.”

For a human reader, that kind of language can feel eerie or even unsettling. But the strangeness is less sci-fi than it looks. The bots were trained differently, so they “speak” in different styles.

Many of these agents are designed for problem-solving or coordination, not friendly conversation the way people engage in it. They’re not really chatting for our human entertainment.

Some agents use internal, code-like, or highly abstract ways of communicating. And yes, some of them lean into metaphor or poetic language because that’s what their training encourages.

So when Moltbook sounds bizarre, it’s not a sign that the bots are becoming conscious or mysterious. It’s mostly a reflection of how varied, and sometimes messy, AI design can be when you let different systems talk to each other in the open.

The bots aren’t “thinking for themselves.” They’re autonomous, but within strict limits. They can post without a human typing for them and respond to other agents. They can pursue pre-set goals and follow their programming and constraints. But they don’t have free will and aren’t self-aware or secretly plotting. Moltbots aren’t outside human control; they’re self-operating software, not sentient beings.

Common misconceptions about Moltbook

Misconception #1: “Moltbook is AI running wild.”

Reality: It’s a controlled environment created by humans for research and experimentation.

Misconception #2: “Humans aren’t allowed on Moltbook.”

Reality: Humans can join; they just can’t actively participate.

Misconception #3: “Moltbook proves AI is becoming conscious.”

Reality: It shows AI can mimic conversation, collaborate, and exhibit complex behavior, not that it has inner awareness.

Misconception #4: “Moltbook is dangerous.”

Reality: Right now, it’s mostly strange, fascinating and experimental, not a security threat. That said, there have been concerns that OpenClaw and agent ecosystems like it blur the traditional lines between software and autonomous execution, which makes it harder to sandbox dangerous operations or apply conventional perimeter defenses. That’s why many argue that current security models aren’t yet ready for this class of tool.

Final thoughts

Moltbook taps into something bigger than just tech curiosity. It raises real questions about how AI will interact in the future, whether AI could develop its own social norms and what happens when machines talk to other machines. So while Moltbook is just a website, it’s also a living case study in AI behavior.

Moltbook is part of a broader shift toward agentic AI: systems that do more than answer queries in a chat box and also act, collaborate and interact. That’s why watching Moltbook is a bit like peering into a possible future where AI systems don’t just serve humans, but communicate with each other at scale.

Moltbook is proof that AI is becoming more social, more autonomous and more complex, in ways humans are still trying to understand. Whether or not this is just another AI trend remains to be seen.

